Configure pools

This article explains the configuration options available when you create and edit a pool.

Pool size and auto termination

When you create a pool, you can control its size by setting three parameters: minimum idle instances, maximum capacity, and idle instance auto termination.

Minimum Idle Instances

The minimum number of instances the pool keeps idle. These instances do not terminate, regardless of the setting specified in Idle Instance Auto Termination. If a cluster consumes idle instances from the pool, Databricks provisions additional instances to maintain the minimum.
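The replenishment rule above can be modeled as a small function. This is a minimal sketch and does not reproduce Databricks' own implementation. `instances_to_provision` is a hypothetical helper that tracks only instance counts, and it ignores Maximum Capacity.

```python
def instances_to_provision(idle: int, min_idle: int) -> int:
    """Return how many instances a pool would add to get back to its minimum idle count.

    Hypothetical model: the pool tops up only the shortfall and never adds
    instances when the idle count is already at or above the minimum.
    """
    if idle < 0 or min_idle < 0:
        raise ValueError("counts must be non-negative")
    return max(0, min_idle - idle)


# A pool with Minimum Idle Instances = 4 starts with 4 idle instances,
# then a cluster attaches and consumes 3 of them.
idle_after_attach = 4 - 3
print(instances_to_provision(idle_after_attach, min_idle=4))  # 3
```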

Maximum Capacity

The maximum number of instances that the pool will provision. If set, this value constrains all instances (idle + used). If a cluster using the pool requests more instances than this number during autoscaling, the request fails with an INSTANCE_POOL_MAX_CAPACITY_FAILURE error.
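The capacity rule above can be sketched as a bounds check. Only the error code string comes from this article. The `acquire_instances` function and `PoolCapacityError` class are hypothetical. The sketch also assumes that idle instances are handed out before new ones are provisioned.

```python
class PoolCapacityError(RuntimeError):
    """Hypothetical error raised when a request would exceed Maximum Capacity."""


def acquire_instances(idle: int, used: int, requested: int, max_capacity=None):
    """Give `requested` instances to a cluster and return the new (idle, used) counts.

    Idle instances are consumed first (assumption); any remainder is newly
    provisioned. If max_capacity is set, it bounds idle + used after the request.
    """
    from_idle = min(idle, requested)
    new_idle = idle - from_idle
    new_used = used + requested
    if max_capacity is not None and new_idle + new_used > max_capacity:
        raise PoolCapacityError("INSTANCE_POOL_MAX_CAPACITY_FAILURE")
    return new_idle, new_used


print(acquire_instances(2, 3, 4, max_capacity=10))  # (0, 7)
try:
    acquire_instances(0, 8, 3, max_capacity=10)
except PoolCapacityError as err:
    print(err)  # INSTANCE_POOL_MAX_CAPACITY_FAILURE
```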

This configuration is optional. Databricks recommends setting a value only in the following circumstances:

-

You have an instance quota you must stay under.

-

You want to protect one set of work from impacting another set of work. For example, suppose your instance quota is 100 and you have teams A and B that need to run jobs. You can create pool A with a max of 50 and pool B with a max of 50 so that the two teams share the 100 quota fairly.

-

You need to cap cost.
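The team A / team B example above is simple division. The sketch below generalizes it to any number of teams. `split_quota` is a hypothetical helper. It gives any remainder to the first teams in the list, one instance each, so the per-pool Maximum Capacity values always add up to exactly the quota.

```python
def split_quota(quota: int, teams: list) -> dict:
    """Divide an instance quota into per-pool Maximum Capacity values."""
    if not teams:
        raise ValueError("need at least one team")
    base, remainder = divmod(quota, len(teams))
    # The first `remainder` teams each get one extra instance.
    return {team: base + (1 if i < remainder else 0) for i, team in enumerate(teams)}


print(split_quota(100, ["A", "B"]))       # {'A': 50, 'B': 50}
print(split_quota(100, ["A", "B", "C"]))  # {'A': 34, 'B': 33, 'C': 33}
```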

Idle Instance Auto Termination

The time in minutes that instances above the value set in Minimum Idle Instances can be idle before being terminated by the pool.
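The interaction between Minimum Idle Instances and Idle Instance Auto Termination can be illustrated with a small selection function. This article does not say which idle instances count as "above the minimum." The sketch assumes that the pool keeps the instances that have been idle for the shortest time. `instances_to_terminate` is a hypothetical helper.

```python
def instances_to_terminate(idle_minutes, min_idle, timeout_minutes):
    """Return indices of idle instances that auto termination would remove.

    idle_minutes[i] is how long idle instance i has been idle.
    Assumption: the min_idle least-idle instances are protected; every other
    instance is terminated once its idle time reaches timeout_minutes.
    """
    by_idle_time = sorted(range(len(idle_minutes)), key=lambda i: idle_minutes[i])
    eligible = by_idle_time[min_idle:]
    return sorted(i for i in eligible if idle_minutes[i] >= timeout_minutes)


print(instances_to_terminate([5, 70, 90, 10], min_idle=1, timeout_minutes=60))  # [1, 2]
```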

Instance types

A pool consists of both idle instances kept ready for new clusters and instances in use by running clusters. All of these instances are of the same instance provider type, selected when creating a pool.

A pool's instance type cannot be edited. Clusters attached to a pool use the same instance type for the driver and worker nodes. Different families of instance types fit different use cases, such as memory-intensive or compute-intensive workloads.

Databricks always provides one year's deprecation notice before ceasing support for an instance type.

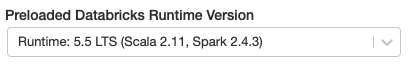
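Because a pool's instance type is fixed at creation, a tool that edits pool settings could reject any change to it up front. The following is a minimal sketch. It assumes that pool settings are held in a dict keyed like the Databricks Instance Pools API (`node_type_id`, `max_capacity`); confirm those names against the current API reference. `check_pool_edit` is hypothetical.

```python
IMMUTABLE_FIELDS = ("node_type_id",)  # the instance type cannot be edited


def check_pool_edit(current: dict, proposed: dict) -> None:
    """Raise ValueError if a proposed edit changes an immutable pool field."""
    for field in IMMUTABLE_FIELDS:
        # A field missing from `proposed` counts as unchanged.
        if proposed.get(field, current.get(field)) != current.get(field):
            raise ValueError(f"{field} cannot be changed on an existing pool")


pool = {"node_type_id": "i3.xlarge", "max_capacity": 50}
check_pool_edit(pool, {"node_type_id": "i3.xlarge", "max_capacity": 80})  # allowed
```

To use a different instance type, create a new pool.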

Preload Databricks Runtime version

You can speed up cluster launches by selecting a Databricks Runtime version to be loaded on idle instances in the pool. If a user selects that runtime when they create a cluster backed by the pool, that cluster will launch even more quickly than a pool-backed cluster that doesn't use a preloaded Databricks Runtime version.

Setting this option to None slows down cluster launches, because it causes the Databricks Runtime version to download on demand to idle instances in the pool. When the cluster releases the instances in the pool, the Databricks Runtime version remains cached on those instances. The next cluster creation operation that uses the same Databricks Runtime version might benefit from this caching behavior, but this is not guaranteed.
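The settings covered so far (pool size, instance type, and preloaded runtime) can also be expressed as a request body. This sketch builds such a body using field names from the Databricks Instance Pools API as I understand it (`instance_pool_name`, `min_idle_instances`, `max_capacity`, `idle_instance_autotermination_minutes`, `preloaded_spark_versions`). Treat them as assumptions to verify against the current API reference. The pool name, node type, and runtime version string are placeholders. Passing `runtime_version=None` corresponds to the None option.

```python
import json


def pool_create_payload(name, node_type_id, min_idle, max_capacity,
                        idle_timeout_minutes, runtime_version=None):
    """Build a hypothetical create-pool request body as a plain dict."""
    payload = {
        "instance_pool_name": name,
        "node_type_id": node_type_id,
        "min_idle_instances": min_idle,
        "idle_instance_autotermination_minutes": idle_timeout_minutes,
    }
    if max_capacity is not None:  # Maximum Capacity is optional
        payload["max_capacity"] = max_capacity
    if runtime_version is not None:  # omit to download the runtime on demand
        payload["preloaded_spark_versions"] = [runtime_version]
    return payload


print(json.dumps(
    pool_create_payload("team-a-pool", "i3.xlarge", 2, 50, 30, "13.3.x-scala2.12"),
    indent=2,
))
```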

AWS configurations

When you configure a pool's AWS instances, you can choose the availability zone, whether to use spot instances and the max spot price, and the EBS volume type and size. All clusters attached to the pool inherit these configurations. To specify configurations, click the Instances tab at the bottom of the pool configuration page.

Availability zones

Choosing a specific availability zone for a pool is useful primarily if your organization has purchased reserved instances in specific availability zones. Read more about AWS availability zones.

Spot instances

You can specify whether to use spot instances and the max spot price to use when launching spot instances, as a percentage of the corresponding on-demand price. By default, Databricks sets the max spot price at 100% of the on-demand price. See AWS spot pricing.

A pool can either be all spot instances or all on-demand instances.
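The max spot price is a percentage of the on-demand price, so the dollar cap is a single multiplication. The sketch below computes it and builds an `aws_attributes`-style dict. The keys (`availability` with `SPOT` or `ON_DEMAND`, `spot_bid_price_percent`, `zone_id`) follow my understanding of the Databricks Instance Pools API and should be checked against its reference. The $0.50/hour on-demand price and the zone name are hypothetical.

```python
def max_spot_price(on_demand_price: float, bid_percent: int = 100) -> float:
    """Max hourly spot price, expressed as a percentage of the on-demand price."""
    return on_demand_price * bid_percent / 100


def pool_aws_attributes(use_spot: bool, bid_percent: int = 100, zone_id=None) -> dict:
    """A pool is all spot or all on-demand, so availability is a single value."""
    attrs = {"availability": "SPOT" if use_spot else "ON_DEMAND"}
    if use_spot:
        attrs["spot_bid_price_percent"] = bid_percent
    if zone_id is not None:
        attrs["zone_id"] = zone_id
    return attrs


print(max_spot_price(0.50))      # 0.5 (default: 100% of on-demand)
print(max_spot_price(0.50, 60))  # 0.3
print(pool_aws_attributes(True, 60, "us-west-2a"))
```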

EBS volumes

This section describes the default EBS volume settings for pool instances.

Default EBS volumes

Databricks provisions EBS volumes for every instance as follows:

-

A 30 GB unencrypted EBS instance root volume used only by the host operating system and Databricks internal services.

-

A 150 GB encrypted EBS container root volume used by the Spark worker. This hosts Spark services and logs.

-

(HIPAA only) A 75 GB encrypted EBS worker log volume that stores logs for Databricks internal services.
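Adding up the default volumes listed above gives the EBS capacity each instance consumes before any shuffle or autoscaling volumes are added. This is useful when checking your account's EBS limits. The 20-instance pool in the last line is a hypothetical example.

```python
def default_ebs_gb(hipaa: bool = False) -> int:
    """Total default EBS capacity (GB) provisioned per pool instance."""
    volumes = {"instance_root": 30, "container_root": 150}
    if hipaa:
        volumes["worker_log"] = 75  # HIPAA deployments only
    return sum(volumes.values())


print(default_ebs_gb())            # 180
print(default_ebs_gb(hipaa=True))  # 255
print(default_ebs_gb() * 20)       # 3600 GB for a hypothetical 20-instance pool
```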

Add EBS shuffle volumes

To add shuffle volumes, select General Purpose SSD in the EBS Volume Type drop-down list.

By default, Spark shuffle outputs go to the instance's local disk. For instance types that do not have a local disk, or if you want to increase your Spark shuffle storage space, you can specify additional EBS volumes. This is particularly useful for preventing out-of-disk-space errors when you run Spark jobs that produce large shuffle outputs.

Databricks encrypts these EBS volumes for both on-demand and spot instances. Read more about AWS EBS volumes.
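When you configure pools through an API instead of the UI, shuffle volumes are described by a disk specification. This sketch assumes the `disk_spec` shape used by the Databricks Instance Pools API (`disk_type.ebs_volume_type`, `disk_count`, `disk_size` in GB). Verify these names against the current reference. `shuffle_disk_spec` is a hypothetical helper, and it does not check AWS or Databricks size limits.

```python
def shuffle_disk_spec(disk_count: int, disk_size_gb: int) -> dict:
    """Build a hypothetical disk_spec for General Purpose SSD shuffle volumes."""
    if disk_count < 1 or disk_size_gb < 1:
        raise ValueError("disk_count and disk_size_gb must be positive")
    return {
        "disk_type": {"ebs_volume_type": "GENERAL_PURPOSE_SSD"},
        "disk_count": disk_count,
        "disk_size": disk_size_gb,
    }


spec = shuffle_disk_spec(2, 500)
print(spec["disk_count"] * spec["disk_size"])  # 1000 GB of extra shuffle space per instance
```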

AWS EBS limits

Ensure that your AWS EBS limits are high enough to satisfy the runtime requirements for all instances in all pools. For information on the default EBS limits and how to change them, see Amazon Elastic Block Store (EBS) Limits.

Autoscaling local storage

If you don't want to allocate a fixed number of EBS volumes at pool creation time, use autoscaling local storage. With autoscaling local storage, Databricks monitors the amount of free disk space available on your pool's Spark workers. If a worker begins to run too low on disk, Databricks automatically attaches a new EBS volume to the worker before it runs out of disk space. EBS volumes are attached up to a limit of 5 TB of total disk space per instance (including the instance's local storage).

To configure autoscaling storage, select Enable autoscaling local storage in the Autopilot Options.

The EBS volumes attached to an instance are detached only when the instance is returned to AWS. That is, EBS volumes are never detached from an instance as long as it is in the pool. To scale down EBS usage, Databricks recommends configuring the settings described in Pool size and auto termination.
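The grow-only behavior described above can be simulated for a single worker. This article does not specify the "too low on disk" threshold or the size of each attached volume. The 10% free-space threshold and `volume_gb` below are hypothetical parameters, and the 5 TB cap is treated as 5,000 GB. `attach_volumes` only ever adds volumes, which matches the statement that volumes are not detached while an instance stays in the pool.

```python
def attach_volumes(capacity_gb, used_gb, volume_gb,
                   low_free_fraction=0.1, limit_gb=5000):
    """Simulate autoscaling local storage on one worker.

    Returns the sizes of EBS volumes attached while the free fraction of disk
    stays below low_free_fraction (hypothetical threshold). Total disk,
    including local storage, never exceeds limit_gb (5 TB, taken as 5,000 GB).
    """
    if capacity_gb <= 0 or volume_gb <= 0:
        raise ValueError("capacity_gb and volume_gb must be positive")
    attached = []
    total = capacity_gb
    while (total - used_gb) / total < low_free_fraction and total + volume_gb <= limit_gb:
        attached.append(volume_gb)
        total += volume_gb
    return attached


print(attach_volumes(capacity_gb=150, used_gb=140, volume_gb=100))    # [100]
print(attach_volumes(capacity_gb=4950, used_gb=4940, volume_gb=100))  # [] (would exceed the cap)
```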

Note

-

Databricks uses Throughput Optimized HDD (st1) to extend the local storage of an instance. The default AWS capacity limit for these volumes is 20 TiB. To avoid hitting this limit, administrators should request an increase in this limit based on their usage requirements.

-

If you want to use autoscaling local storage, the IAM role or keys used to create your account must include the permissions ec2:AttachVolume, ec2:CreateVolume, ec2:DeleteVolume, and ec2:DescribeVolumes. For the complete list of permissions and instructions on how to update your existing IAM role or keys, see Configure your AWS account (cross-account IAM role).
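Before you enable autoscaling local storage, you could check a policy document for the four required actions. `missing_volume_permissions` is a hypothetical helper. It looks only at `Allow` statements and their `Action` entries, including wildcards such as `ec2:Describe*`. It ignores `Deny`, `NotAction`, `Resource`, and `Condition`, so treat it as a quick lint, not a substitute for AWS's own policy evaluation.

```python
import fnmatch

REQUIRED_ACTIONS = (
    "ec2:AttachVolume",
    "ec2:CreateVolume",
    "ec2:DeleteVolume",
    "ec2:DescribeVolumes",
)


def missing_volume_permissions(policy: dict) -> list:
    """Return the required EC2 volume actions not granted by any Allow statement."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    allowed = []
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        allowed.extend(action.lower() for action in actions)  # IAM action names are case-insensitive
    return [
        action for action in REQUIRED_ACTIONS
        if not any(fnmatch.fnmatchcase(action.lower(), pattern) for pattern in allowed)
    ]


policy = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": ["ec2:Describe*", "ec2:CreateVolume"], "Resource": "*"}],
}
print(missing_volume_permissions(policy))  # ['ec2:AttachVolume', 'ec2:DeleteVolume']
```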

Source: https://docs.databricks.com/clusters/instance-pools/configure.html